Universität Bielefeld › Technische Fakultät › NI

Cognitive Robotics

Cognitive Robotics draws from classical robotics, artificial intelligence, cognitive science and neurobiology to elucidate and synthesize aspects of action-oriented intelligence. Using a robot system with a multi-fingered manipulator and an active binocular camera head, we investigate strategies for coordinating the actions of such a system with those of a human partner. We focus on dextrous manipulation of objects, the combination of tactile and visual sensing, the joining of action primitives into action sequences, and the development of learning algorithms.

The NeuTouch ITN aims to improve artificial tactile systems by training a new generation of researchers who study how human and animal tactile systems work, develop a new type of technology based on the same principles, and use this technology to build robots that can help humans in daily tasks and artific

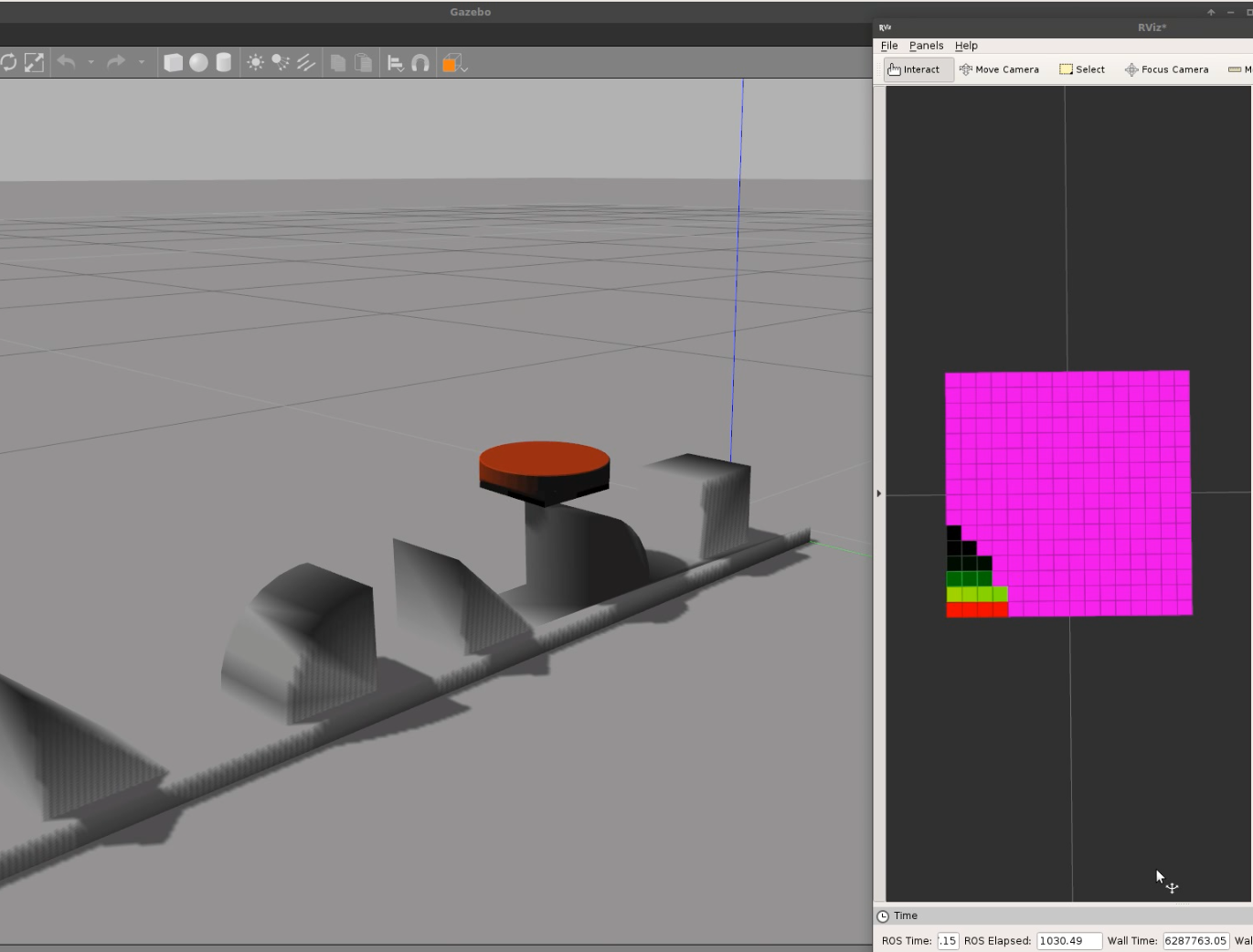

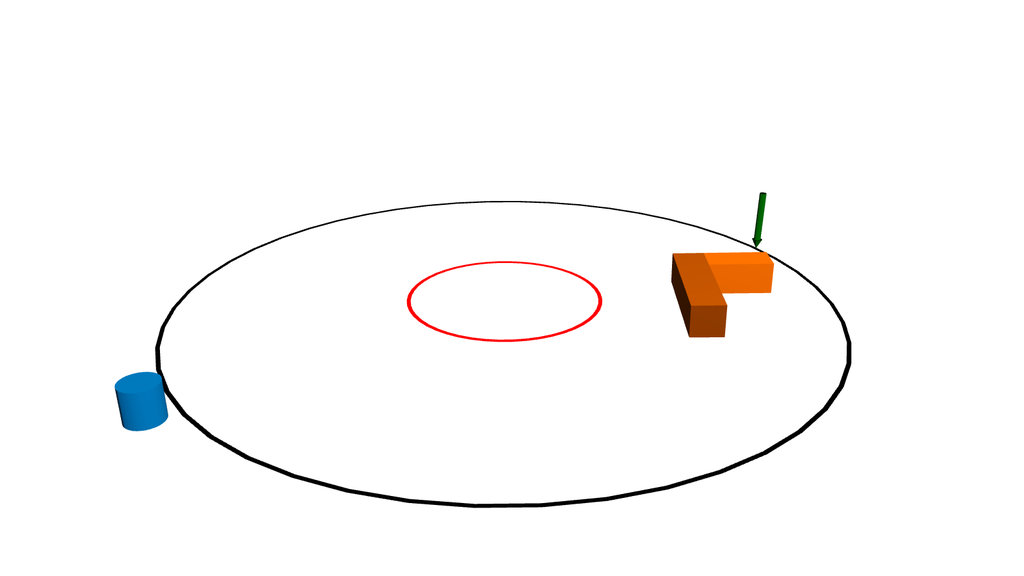

Understanding and reasoning about physics is an important ability of intelligent agents. We are developing an AI agent capable of solving physical reasoning tasks. If you would like to know more about this project/thesis opportunity, check the websites [1][2] or contact Dr. Andrew Melnik.

In the project REBA+, funded within the DFG priority program "Autonomous Learning", we develop, implement and evaluate rich extensions of a robot's body schema, along with learning algorithms that use these representations as strong priors. The aim is to enable rapid and autonomous tool use and flexible coping with novel mechanical linkages between the body, the grasped tool and target objects.

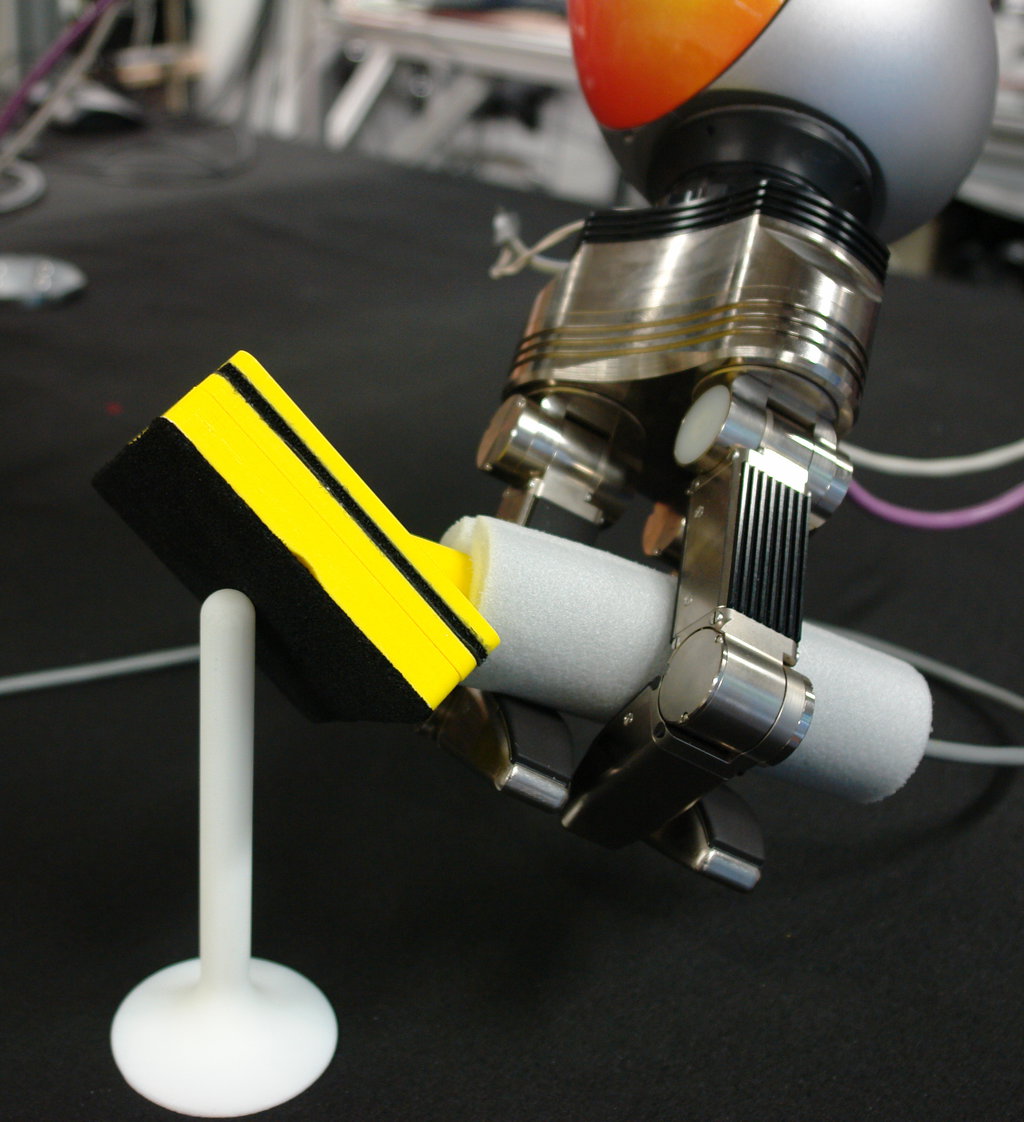

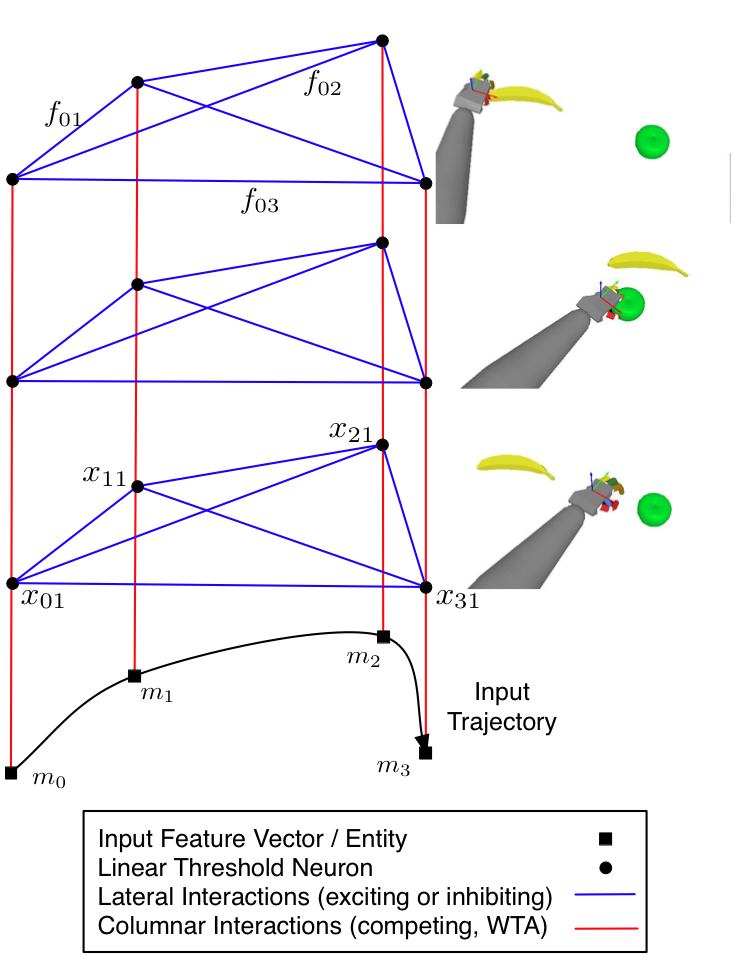

Because of the complex anatomy of the human hand, in the absence of external constraints a large number of postures and force combinations can be used to attain a stable grasp.

Which relations exist between properties of animals or people and their kinematic patterns? For example, can we tell who performed a hand-over, of which kind of object and under which conditions, just by looking at the sequence of joint angles? We try to answer these questions using a 3D motion tracking system.

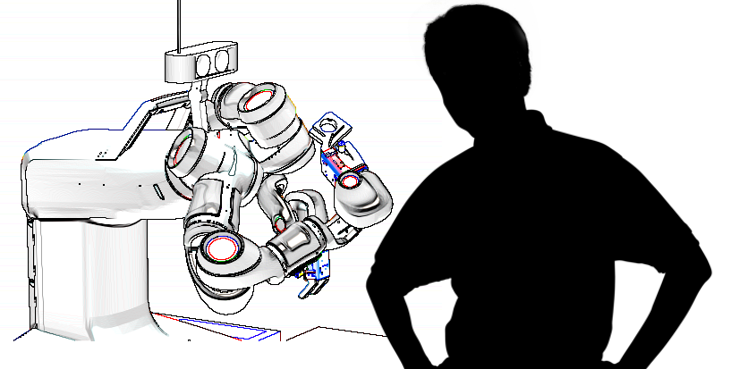

The SARAFun project has been formed to enable a non-expert user to integrate a new bi-manual assembly task on a robot in less than a day. This will be accomplished by augmenting the robot with cutting-edge sensory and cognitive abilities, as well as the reasoning abilities required to plan and execute an assembly task.

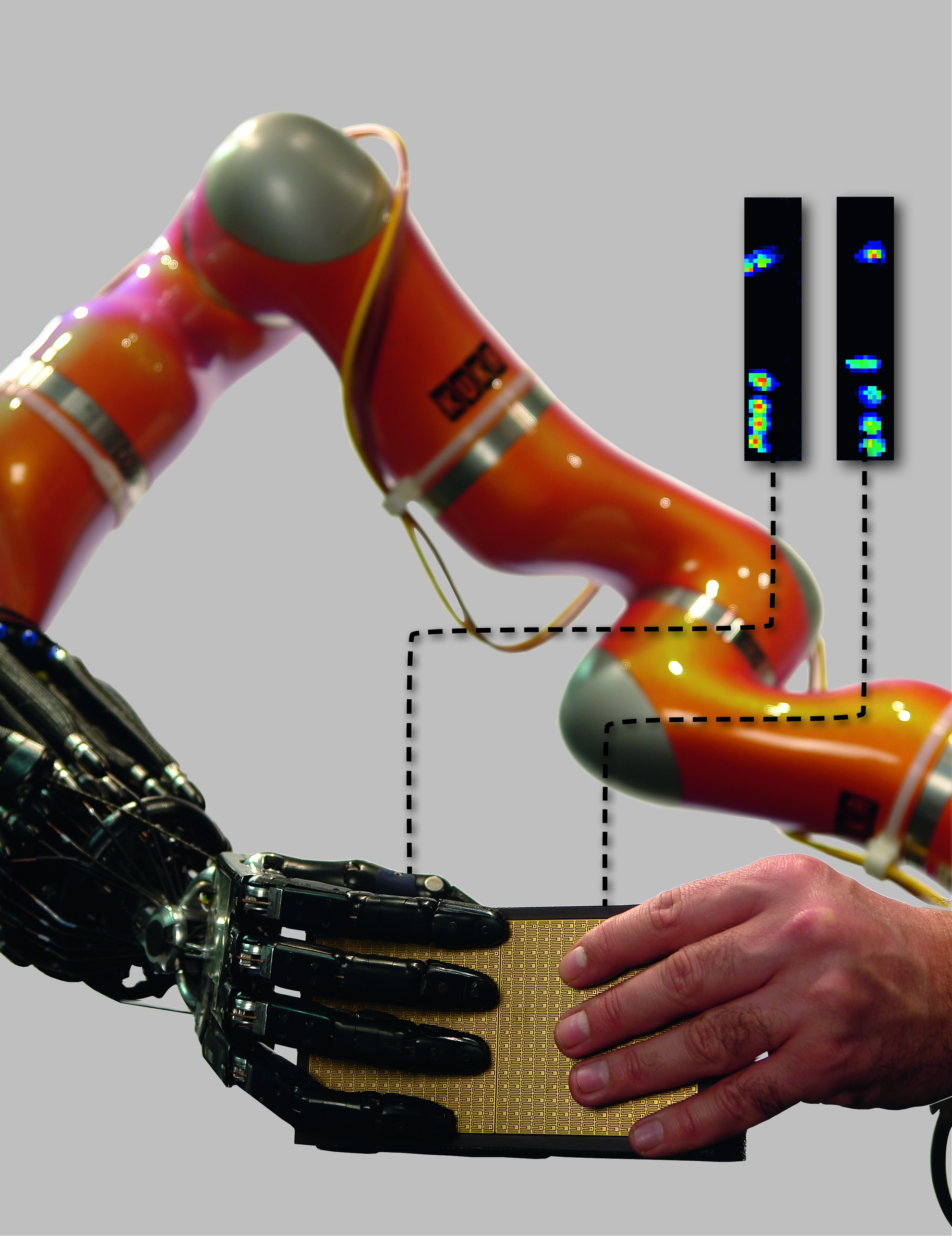

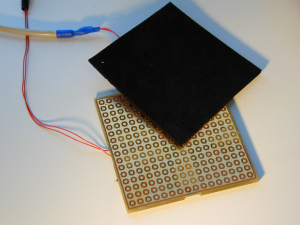

This 16x16 tactile sensor array is our workhorse for tactile-based robotics research. With 256 taxels on a grid of 8 cm x 8 cm, it offers a spatial resolution of 5 mm. The modular design allows individual modules to be stitched together to yield a larger tactile-sensitive surface.
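The resolution figure follows directly from the module geometry: 80 mm divided by 16 taxels per side gives a 5 mm pitch. The sketch below reproduces that arithmetic and illustrates how two modules could be joined into one wider pressure frame. The nested-list data layout and the helper names (`taxel_pitch_mm`, `stitch_row`, `blank_module`) are assumptions made for illustration only, not the sensor's actual driver interface.

```python
# Geometry taken from the text: 16 x 16 taxels on an 8 cm x 8 cm module.
TAXELS_PER_SIDE = 16
MODULE_SIDE_MM = 80.0


def taxel_pitch_mm(side_mm=MODULE_SIDE_MM, taxels=TAXELS_PER_SIDE):
    """Centre-to-centre taxel spacing along one axis."""
    return side_mm / taxels


def stitch_row(modules):
    """Join equally sized modules left to right into one wider frame.

    Each module is a list of rows; each row is a list of taxel readings.
    (Hypothetical layout, chosen only for this example.)
    """
    height = len(modules[0])
    if any(len(m) != height for m in modules):
        raise ValueError("all modules must have the same number of rows")
    # Concatenate row r of every module into one long row.
    return [sum((m[r] for m in modules), []) for r in range(height)]


def blank_module(value=0):
    """A 16 x 16 frame filled with a constant reading."""
    return [[value] * TAXELS_PER_SIDE for _ in range(TAXELS_PER_SIDE)]


pitch = taxel_pitch_mm()
surface = stitch_row([blank_module(0), blank_module(1)])
print(pitch, len(surface), len(surface[0]))  # 5.0 16 32
```

Stitching two modules side by side keeps the 5 mm pitch and doubles the width to 32 taxels (16 cm), assuming the modules are mounted without a gap.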

Clothing provides a challenging test domain for research in the field of cognitive robotics. On the one hand, robots have to make use of commonsense knowledge to be able to understand the socially constructed meaning and function of garments. On the other hand, the variance resulting from deformations and differences between individual items of clothing calls for implicit representations which have to be learned from experience. Our robot uses topological, geometric, and subsymbolic knowledge representations for the manipulation of clothes with its anthropomorphic hands.

The goal of this project is to investigate the principles underlying co-evolution of a body shape and its neural controller. As a specific model system, we consider a robot hand that is controlled by a neural network. In contrast to existing work, we focus on the genetic regulation of neural circuits and morphological development. Our interest is directed at a better understanding of the facilitatory potential of co-evolution for the emergence of complex new functions, the interplay between development and evolution, the response of different genetic architectures to changing environments, as well as the role of important boundary constraints, such as wiring and tissue costs.

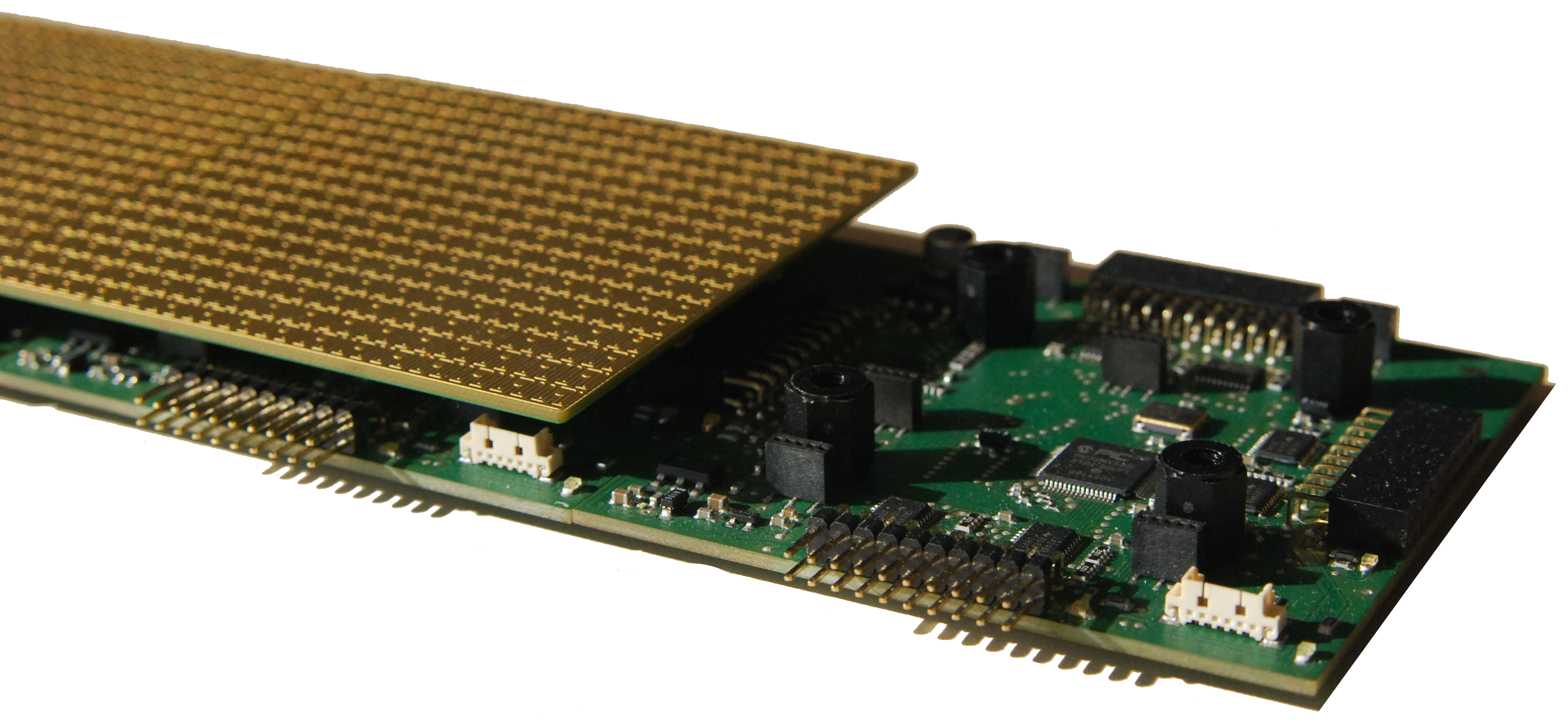

The spatio-temporal contact pattern during manipulation is a valuable source of information about object identity and object state, especially in uncertain environments. Using a bimanual robot manipulator setup with two 256-"pixel" touch sensor arrays, the present project creates a "haptic pattern database" and investigates machine learning techniques to analyse the information content of different haptic features and to extract identity and state information from haptic patterns. A closely related goal is the development of dynamic control strategies for contact movements with deformable or plastic objects, such as clay.

Manipulation of paper is a rich domain of manual intelligence that we encounter in many daily tasks. The present project attempts to analyse and implement the "web" of visuo-motor coordination skills needed to endow an anthropomorphic robot hand with the ability to manipulate paper (and paper-like objects) in a variety of situations of increasing complexity. This includes aspects such as modeling interaction with compliant objects, action-based representation, and bimanual coordination to enable object transformations such as tearing and folding.

Unlike most existing approaches to the grasp selection task for anthropomorphic robot hands, this vision-based project aims for a solution that does not depend on knowing the 3D shape of the object a priori. Instead, it decomposes the object view (obtained from mono or stereo cameras) into local, grasping-relevant shape primitives whose optimal grasp type and approach direction are known or learned beforehand. Based on this decomposition, a list of possible grasps can be generated and ordered according to the anticipated overall grasp quality.
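The final step described above, turning a set of detected primitives into an ordered list of candidate grasps, can be sketched roughly as follows. Everything specific here is an assumption for illustration: the `Primitive` and `Grasp` classes, the entries in `GRASP_TABLE`, and the choice to scale a base quality by a `visibility` score are invented for the example and are not taken from the project itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Primitive:
    """A local shape primitive found in the object view (hypothetical fields)."""
    shape: str         # e.g. "cylinder", "box", "sphere"
    visibility: float  # 0..1, how well the primitive is seen in the view


@dataclass(frozen=True)
class Grasp:
    grasp_type: str
    approach: tuple    # unit vector of the approach direction
    quality: float     # anticipated overall grasp quality


# Illustrative lookup: shape -> (grasp type, approach direction, base quality).
# These entries are invented; a real system would know or learn them beforehand.
GRASP_TABLE = {
    "cylinder": ("power", (0.0, 1.0, 0.0), 0.9),
    "box": ("precision", (0.0, 0.0, -1.0), 0.7),
    "sphere": ("tripod", (0.0, 0.0, -1.0), 0.8),
}


def rank_grasps(primitives):
    """Return candidate grasps, best anticipated quality first."""
    candidates = []
    for p in primitives:
        if p.shape not in GRASP_TABLE:
            continue  # no known grasp for this primitive
        grasp_type, approach, base = GRASP_TABLE[p.shape]
        # Assumed scoring rule: poorly visible primitives are trusted less.
        candidates.append(Grasp(grasp_type, approach, base * p.visibility))
    return sorted(candidates, key=lambda g: g.quality, reverse=True)


view = [Primitive("box", 1.0), Primitive("cylinder", 0.5), Primitive("cone", 1.0)]
ranked = rank_grasps(view)
print([g.grasp_type for g in ranked])  # ['precision', 'power']
```

In this toy view the fully visible box outranks the half-visible cylinder, and the unknown "cone" primitive contributes no candidate because the table holds no grasp for it.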

What principles enable rapid and adaptive alignment in coordination?
