Universität Bielefeld › Technische Fakultät › NI


Representation of manual actions for adaptive alignment in human-robot-cooperation

Priming of relevant motor degrees of freedom to achieve rapid alignment of motor actions can be conceptualised as the rapid selection of low-dimensional action manifolds that capture the essential motor degrees of freedom. The present project investigates the construction of such manifolds from training data and how observed action trajectories can be decomposed into traversals of manifolds from a previously acquired repertoire. To this end we focus on manual actions of an anthropomorphic hand and combine Unsupervised Kernel Regression (UKR, a recent statistical learning method) with Competitive Layer Models (CLM, a recurrent neural network architecture) to solve the tasks of manifold construction and dynamic action segmentation.

Recording manual actions

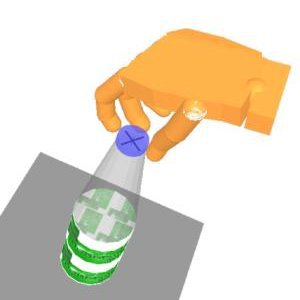

In the initial preparatory phase of the project, we needed to record manual actions from human demonstrations as a basis for the subsequent work. As the input device, we used a hand-mounted dataglove. With a two-step procedure, we were able to robustly map manual demonstrations, in the form of dataglove sensor readings, first onto an intermediate human-like hand model and then onto our robotic hand, the Shadow Dextrous Hand. As the first manual action to investigate, we chose turning a bottle cap, and we recorded several sequences of the corresponding hand postures for different cap radii.

Construction of low-dimensional action manifolds

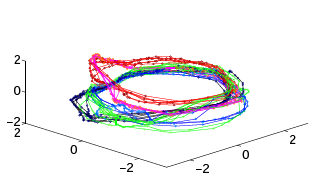

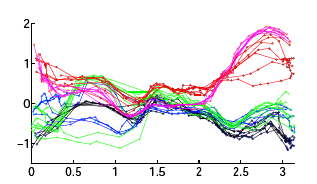

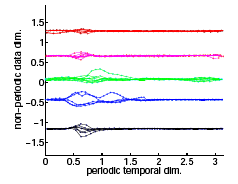

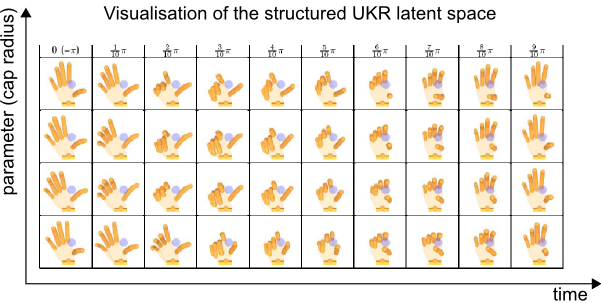

Starting from our earlier work on dextrous grasping and Grasp Manifolds, we proposed a related representation for manipulation movements, i.e. dextrous manipulation. The main idea is to construct manifolds embedded in the finger joint angle space that represent the subspace of hand postures associated with the targeted manipulation movement. In contrast to our grasping approach, the manifold now represents sequences of hand postures corresponding to whole movement cycles instead of single final grasp postures. To this end, we used Unsupervised Kernel Regression, a recent approach to non-linear manifold learning, and extended it so that it can represent (periodic) sequences of human motion capture data in a highly structured way.
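To make the UKR idea more concrete, the following Python sketch (NumPy only) implements the basic, unextended UKR formulation as it is commonly described: the mapping from latent space to data space is a Nadaraya-Watson kernel regression `f(x; X) = sum_i K(x - x_i) y_i / sum_j K(x - x_j)`, and the latent coordinates `X` are chosen to minimise the leave-one-out reconstruction error. It does not include the project's extensions for periodic sequences, and it omits the actual optimisation of `X`. The Gaussian kernel, the bandwidth `h = 0.1`, the toy semicircle data and all function names are illustrative assumptions.

```python
import numpy as np

def _gaussian_kernel(D2, h):
    """Unnormalised Gaussian kernel values from squared latent distances."""
    return np.exp(-0.5 * D2 / h**2)

def ukr_map(x, X, Y, h=0.1):
    """f(x; X): kernel-weighted average of the training data Y (N x D)
    at latent query points x (M x q), given latent coordinates X (N x q)."""
    D2 = ((x[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)   # (M, N)
    K = _gaussian_kernel(D2, h)
    return (K / K.sum(axis=1, keepdims=True)) @ Y

def ukr_loo_error(X, Y, h=0.1):
    """Leave-one-out reconstruction error R(X): each y_i is predicted
    without its own latent point, which rules out the trivial solution
    in which every point only reconstructs itself."""
    D2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)   # (N, N)
    K = _gaussian_kernel(D2, h)
    np.fill_diagonal(K, 0.0)                                   # leave y_i out
    F = (K / K.sum(axis=1, keepdims=True)) @ Y
    return np.mean(((Y - F) ** 2).sum(axis=1))

# Hypothetical toy data: 2-D "postures" along a semicircle.
t = np.linspace(0.0, np.pi, 50)
Y = np.column_stack([np.cos(t), np.sin(t)])
X_ordered = t[:, None]                                         # latent = true movement phase
X_shuffled = np.random.default_rng(0).permutation(t)[:, None]  # same values, wrong order

err_ordered = ukr_loo_error(X_ordered, Y)
err_shuffled = ukr_loo_error(X_shuffled, Y)
```

With the latent coordinates ordered by the true movement phase, the leave-one-out error is far lower than for a shuffled assignment of the same values; this is the property a UKR optimisation exploits when it adjusts `X`.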

Figures, left to right: Isomap embedding of the cap-turning data; initialisation of UKR's latent space; latent space after training.

More specifically, we designed these manifolds such that specific movement parameters, and especially the advance in time, are explicitly represented by distinct manifold dimensions. Once generated, such structured manifolds allow easy and purposeful navigation within the manifold and thus the reproduction or synthesis of unseen movements of the represented class.
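The following sketch illustrates what navigation in such a structured latent space could look like. It assumes a two-dimensional latent space with a periodic phase dimension `phi` (the advance in time) and a parameter dimension `s` (here standing in for the cap radius). It uses a periodic kernel `exp(kappa * (cos(dphi) - 1))` along `phi` as one possible way to handle periodicity, and it places the latent points of a hypothetical toy dataset directly at their structured positions instead of obtaining them by training. None of these choices, names or parameter values is taken from the project itself.

```python
import numpy as np

def structured_ukr(phi, s, Phi, S, Y, kappa=50.0, h_s=0.5):
    """Kernel regression over a 2-D latent space (phi, s).

    phi, s : query coordinates, arrays of equal length M
    Phi, S : latent coordinates of the N training points
    Y      : training data (N x D)
    The kernel is a product of a periodic kernel in phi and a
    Gaussian kernel (width h_s) in s.
    """
    k_phi = np.exp(kappa * (np.cos(phi[:, None] - Phi[None, :]) - 1.0))  # (M, N)
    k_s = np.exp(-0.5 * ((s[:, None] - S[None, :]) / h_s) ** 2)         # (M, N)
    K = k_phi * k_s
    return (K / K.sum(axis=1, keepdims=True)) @ Y

# Hypothetical toy data: two "movement cycles" with parameter s = 1 and s = 2,
# each sampled at 30 phases; the data point is s * (cos phi, sin phi).
phases = np.linspace(0.0, 2 * np.pi, 30, endpoint=False)
Phi = np.concatenate([phases, phases])
S = np.repeat([1.0, 2.0], 30)
Y = S[:, None] * np.column_stack([np.cos(Phi), np.sin(Phi)])

# Navigation: hold s at an unseen value and advance phi through one period.
query_phi = np.linspace(0.0, 2 * np.pi, 100, endpoint=False)
cycle = structured_ukr(query_phi, np.full(100, 1.5), Phi, S, Y)
```

Holding `s` at 1.5, a value between the two training parameters, and advancing `phi` through one period synthesises a closed cycle whose radius lies between those of the two training cycles (close to 1.5, slightly shrunk by the kernel smoothing along `phi`).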

For a short demonstration, please visit the (external) movie page.

Current work: Dynamic action segmentation

Our current work deals with the segmentation of demonstrated action sequences. To this end, we combined Competitive Layer Models (CLM) with the Manipulation Manifolds presented above, or rather with their underlying method, Unsupervised Kernel Regression (UKR), such that each layer of the CLM corresponds to one specific, previously trained UKR movement manifold of the covered repertoire. The CLM dynamics then decompose the presented sequence of motion data by assigning each trajectory point to the CLM layer associated with the "best matching" UKR manifold. We call "best matching" the UKR manifold that is best able to reproduce the presented trajectory point in its context, i.e. its trajectory data history/neighbourhood.
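A much simplified, CLM-style version of this segmentation can be sketched as follows. Each layer `a` receives a layer-specific input `h[t, a] = exp(-e[t, a] / sigma2)`, where `e[t, a]` is the squared distance of trajectory point `t` to manifold `a`; the layers compete at each point, and temporal neighbours within `k` steps support each other within the same layer. The activities are updated with an asynchronous linear-threshold rule of the kind used for CLMs. The layer-specific input, the purely temporal compatibility, all parameter values, and the two circles standing in for trained UKR manifolds are assumptions made for illustration; in particular, the manifold fit is a brute-force search over a latent grid rather than a UKR projection.

```python
import numpy as np

def manifold_errors(Y, manifolds, grid):
    """e[t, a]: squared distance of point Y[t] to manifold a.

    Each (hypothetical) manifold is a callable mapping latent values to
    data points; the projection is a brute-force search over `grid`."""
    E = np.empty((len(Y), len(manifolds)))
    for a, f in enumerate(manifolds):
        M = f(grid)                                               # (G, D) manifold samples
        D2 = ((Y[:, None, :] - M[None, :, :]) ** 2).sum(axis=-1)  # (T, G)
        E[:, a] = D2.min(axis=1)
    return E

def clm_segment(E, sigma2=0.1, k=2, lam=0.25, J=2.0, sweeps=50):
    """CLM-style segmentation: returns the winning layer for every point."""
    T, L = E.shape
    H = np.exp(-E / sigma2)   # layer-specific input: fit of point t to layer a
    X = np.zeros((T, L))      # non-negative layer activities x[t, a]
    # J exceeds the summed lateral weights (2 * k * lam = 1), so the energy
    # decreased by these asynchronous updates stays bounded below.
    for _ in range(sweeps):
        for t in range(T):
            lo, hi = max(0, t - k), min(T, t + k + 1)
            for a in range(L):
                support = lam * (X[lo:hi, a].sum() - X[t, a])  # temporal neighbours, same layer
                rivals = X[t].sum() - X[t, a]                  # other layers at the same point
                X[t, a] = max(0.0, H[t, a] - rivals + support / J)
    return X.argmax(axis=1)

# Toy example: two circles stand in for trained UKR movement manifolds.
def circle(r):
    return lambda s: r * np.column_stack([np.cos(s), np.sin(s)])

manifolds = [circle(1.0), circle(2.0)]
grid = np.linspace(0.0, 2 * np.pi, 200, endpoint=False)
s = 2 * np.pi * np.arange(20) / 20
Y = np.vstack([manifolds[0](s), manifolds[1](s)])  # 20 points from each manifold
labels = clm_segment(manifold_errors(Y, manifolds, grid))
```

Each point is assigned to the layer with the largest activity; for this toy trajectory, the first 20 points (sampled from the first circle) end up in layer 0 and the remaining 20 in layer 1.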