Universität Bielefeld › Technische Fakultät › NI

Imitation Learning

In the near future, more and more people will need assistance with everyday tasks while still wanting to maintain a high degree of self-reliance. Cognitive robot servants that are easy to use and can operate safely in household environments will meet these individual needs. However, programming a robot to perform an individual task is a complex problem that usually requires expert programmers and incurs high labor costs. To serve a mass market, the cost of programming household robots must decrease. One promising approach is to equip cognitive robots with task-learning abilities that let them learn a task from demonstrations by naive (non-expert) users. This paradigm is widely known as Programming by Demonstration (PbD) or Imitation Learning.

Observation of Human Actions

To observe human task demonstrations, we use an Immersion CyberGlove, which senses the finger joint angles to capture the posture of the human hand. Additionally, ARToolKit markers mounted on the backs of the hands with elastic straps record the pose of the hands in 6D space (3 translational and 3 rotational degrees of freedom). An overhead camera records the scene from above. This setup allows us to locate a person's hand in 6D space (from the ARToolKit marker) together with its posture (from the CyberGlove sensor readings) and to estimate the actions the user performs. For additional information on object locations, we use a state-of-the-art object tracking system based on color histograms.
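The object tracker itself is not described here. As a rough illustration of the underlying idea only, the sketch below matches a reference color histogram against candidate windows of a camera frame using the Bhattacharyya coefficient. It assumes NumPy, a joint RGB histogram with 8 bins per channel, and an exhaustive sliding-window search. The function names `color_histogram`, `bhattacharyya`, and `locate` are hypothetical. Practical histogram trackers usually replace the exhaustive search with a local method such as mean shift.

```python
import numpy as np

def color_histogram(patch, bins=8):
    """Normalized joint RGB histogram of an (H, W, 3) uint8 image patch."""
    # Quantize each channel into `bins` levels and combine them into one bin index.
    q = (patch.astype(np.int64) * bins) // 256
    idx = (q[..., 0] * bins + q[..., 1]) * bins + q[..., 2]
    hist = np.bincount(idx.ravel(), minlength=bins ** 3).astype(float)
    return hist / hist.sum()

def bhattacharyya(p, q):
    """Bhattacharyya coefficient in [0, 1]; 1.0 means identical histograms."""
    return float(np.sum(np.sqrt(p * q)))

def locate(frame, model_hist, win, step=4, bins=8):
    """Slide a win x win window over the frame and return the best-matching corner."""
    best_score, best_pos = -1.0, None
    height, width = frame.shape[:2]
    for y in range(0, height - win + 1, step):
        for x in range(0, width - win + 1, step):
            window = frame[y:y + win, x:x + win]
            score = bhattacharyya(color_histogram(window, bins), model_hist)
            if score > best_score:
                best_score, best_pos = score, (y, x)
    return best_pos, best_score

# Synthetic test frame: gray background with a red 16 x 16 "object".
frame = np.full((64, 64, 3), 128, dtype=np.uint8)
frame[24:40, 40:56] = (255, 0, 0)

# Reference model built from a pure red patch.
model = np.zeros((16, 16, 3), dtype=np.uint8)
model[..., 0] = 255
model_hist = color_histogram(model)

pos, score = locate(frame, model_hist, win=16)
print(pos, score)  # → (24, 40) 1.0
```

Only the window that covers the red square exactly reaches a coefficient of 1.0. Windows that overlap it partially score the square root of their red fraction, so the search peaks at the object's location.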

Task Segmentation

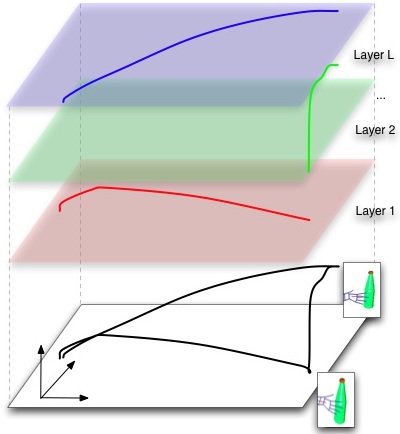

We developed a general framework and methodology for decomposing a task demonstration into its constituent subtasks using the neural Competitive Layer Model (CLM). We extended the psychological theory of perceptual grouping by Gestalt rules from the domain of visual patterns to the domain of spatio-temporal processes arising from actions, and we explored how well Gestalt laws characterize good action primitives for task decomposition. Such an approach can connect the Gestalt approach, which has so far been primarily perception-oriented, with more recent ideas on the pivotal role of the action-perception loop in representing and decomposing interactions. We applied this method to segmenting action primitives from motion trajectories. Gestalt principles are implemented as "field-like" interactions that are learned from data within the layered neural network of the CLM. These interactions drive a dynamic process that separates trajectories at discontinuities and assigns different segments to different layers of neural units.
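The full CLM uses continuous dynamics and learned interactions, which are beyond a short example. The sketch below assumes a simplified, asynchronous winner-take-all update. Each feature (here, one step of a trajectory) moves to the layer whose members give it the largest summed lateral support, the sum of f(r, r') over the features r' in that layer. A feature moves only on strict improvement, so the grouping energy decreases monotonically. Instead of learned interactions, the sketch uses a hand-made, hypothetical "good continuation" rule in which steps with similar movement directions attract and dissimilar ones inhibit. The function names, the threshold of 0.5, and the number of layers are illustrative assumptions.

```python
import numpy as np

def direction_features(traj):
    """Unit movement direction of every step of a (T, 2) trajectory."""
    steps = np.diff(traj, axis=0)
    norms = np.linalg.norm(steps, axis=1, keepdims=True)
    return steps / np.maximum(norms, 1e-9)

def continuation_interaction(dirs, threshold=0.5):
    """Hypothetical Gestalt rule: similar directions attract, dissimilar ones inhibit."""
    f = dirs @ dirs.T - threshold   # cosine similarity shifted by a threshold
    np.fill_diagonal(f, 0.0)        # no self-interaction
    return f

def clm_wta(f, n_layers=3, max_sweeps=1000, seed=0):
    """Simplified asynchronous winner-take-all variant of the CLM grouping dynamics."""
    rng = np.random.default_rng(seed)
    n = f.shape[0]
    labels = rng.integers(n_layers, size=n)   # random initial layer for each feature
    for _ in range(max_sweeps):
        changed = False
        for r in range(n):
            # Lateral support from each layer: sum of f[r, r'] over features r' in that layer.
            support = np.array([f[r, labels == a].sum() for a in range(n_layers)])
            best = int(np.argmax(support))
            # Move only on strict improvement, so the grouping energy strictly decreases.
            if support[best] > support[labels[r]]:
                labels[r] = best
                changed = True
        if not changed:
            break
    return labels

# L-shaped demonstration: six steps to the right, then six steps upward.
right = np.column_stack([np.arange(7.0), np.zeros(7)])
up = np.column_stack([np.full(6, 6.0), np.arange(1.0, 7.0)])
traj = np.vstack([right, up])

labels = clm_wta(continuation_interaction(direction_features(traj)))
print(labels)  # one layer index for each of the 12 steps
```

For this clean L-shaped trajectory, the six rightward steps end up in one layer and the six upward steps in another, regardless of the random initial assignment. Which layer indices the two segments receive depends on the seed.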

Action Sequences

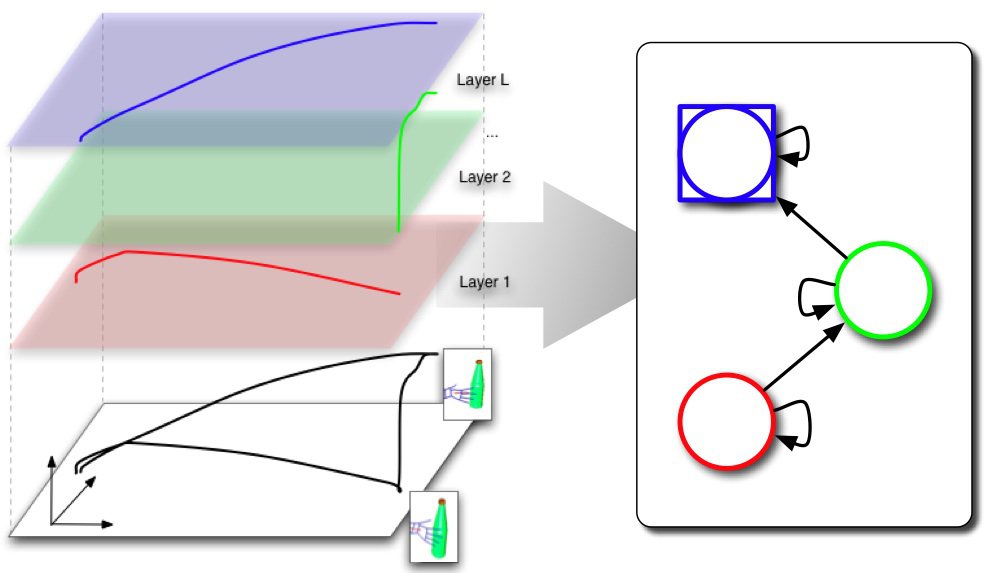

We are working on methods for selecting, parameterizing, and learning action primitives from a sequence of observed segments. Each segment corresponds to a subtask with a specific subgoal, and all subgoals must be fulfilled in sequence to achieve the overall goal of the task. A further research topic is automatically extracting programs from user demonstrations, abstracting them, and encoding them in so-called Hierarchical State Machines (HSMs).
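The following is a minimal sketch of an HSM for concreteness, not the encoding used in this project. A composite state runs its child states in order. Each leaf state repeats its action primitive until its subgoal predicate holds, which mirrors the sequential subgoal structure described above. The `State` class, the `run` function, the Boolean world model, and the subtasks `reach`, `grasp`, and `lift` are hypothetical illustrations.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

World = Dict[str, bool]

@dataclass
class State:
    name: str
    # Subgoal test: the state is finished once this returns True.
    subgoal: Callable[[World], bool]
    # Action primitive executed while the subgoal is not yet met (leaf states only).
    action: Optional[Callable[[World], None]] = None
    # Child states executed in order (composite states only).
    children: List["State"] = field(default_factory=list)

def run(state: State, world: World, trace: List[str], max_steps: int = 100) -> bool:
    if state.children:
        # Composite state: every child must reach its subgoal, in sequence.
        for child in state.children:
            if not run(child, world, trace, max_steps):
                return False
        return state.subgoal(world)
    # Leaf state: repeat the primitive until the subgoal holds or we give up.
    steps = 0
    while not state.subgoal(world):
        if state.action is None or steps >= max_steps:
            return False
        trace.append(state.name)
        state.action(world)
        steps += 1
    return True

def set_flag(key: str) -> Callable[[World], None]:
    """Toy primitive that simply makes one world fact true."""
    def act(world: World) -> None:
        world[key] = True
    return act

pick_up = State("pick_up", subgoal=lambda w: w["lifted"], children=[
    State("reach", lambda w: w["at_object"], set_flag("at_object")),
    State("grasp", lambda w: w["grasped"], set_flag("grasped")),
    State("lift", lambda w: w["lifted"], set_flag("lifted")),
])

world = {"at_object": False, "grasped": False, "lifted": False}
trace: List[str] = []
ok = run(pick_up, world, trace)
print(ok, trace)  # → True ['reach', 'grasp', 'lift']
```

Composite states can themselves be children, so deeper task hierarchies are expressed by nesting. In a learned system, the subgoal predicates and the parameters of each primitive would come from the observed segments rather than being written by hand.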