Universität Bielefeld › Technische Fakultät › NI


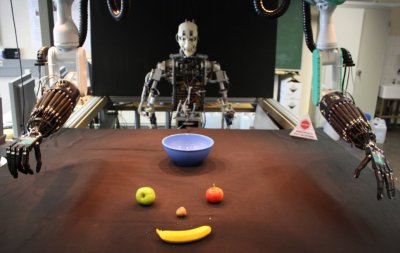

Vision-based Grasping

The goal of this project is to develop a generic decomposition framework for affordance-based grasping with anthropomorphic robot hands. Unlike existing approaches to grasp selection, the project aims for a solution that does not depend on a priori knowledge of the object's 3D shape. Instead, the view of the object (obtained from mono or stereo cameras) is decomposed into local, grasping-relevant shape primitives whose optimal grasp type and approach direction are known or have been learned beforehand. Based on this decomposition, a list of possible grasps can be generated and ranked according to the anticipated overall grasp quality.
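The final step, going from decomposition to a ranked grasp list, can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's method: it assumes the image decomposition has already produced labeled primitives, and the primitive types, the `GRASP_PRIORS` table and its values, and the scoring rule (prior grasp quality weighted by detection confidence) are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical prior knowledge per primitive type: grasp type, approach
# direction (unit vector, simplified to the camera frame) and expected
# grasp quality in [0, 1]. All values are illustrative only.
GRASP_PRIORS = {
    "cylinder": ("power", (1.0, 0.0, 0.0), 0.9),
    "box": ("pinch", (0.0, 0.0, -1.0), 0.7),
    "sphere": ("precision", (0.0, 0.0, -1.0), 0.6),
}


@dataclass
class Primitive:
    kind: str          # primitive type found by the (assumed) image decomposition
    center: tuple      # estimated position in camera coordinates
    confidence: float  # detection reliability in [0, 1]


@dataclass
class GraspCandidate:
    grasp_type: str
    approach: tuple
    center: tuple
    score: float


def generate_grasps(primitives):
    """Map each primitive to its prior grasp and rank by anticipated quality."""
    candidates = []
    for p in primitives:
        if p.kind not in GRASP_PRIORS:
            continue  # no prior knowledge about this primitive type: skip it
        grasp_type, approach, quality = GRASP_PRIORS[p.kind]
        # Hypothetical scoring rule: prior quality weighted by detection confidence.
        candidates.append(GraspCandidate(grasp_type, approach, p.center, quality * p.confidence))
    return sorted(candidates, key=lambda c: c.score, reverse=True)


parts = [
    Primitive("box", (0.0, 0.0, 0.4), 0.95),
    Primitive("cylinder", (0.1, 0.0, 0.5), 0.8),
    Primitive("cone", (0.0, 0.1, 0.4), 0.9),  # unknown type, ignored
]
for g in generate_grasps(parts):
    print(g.grasp_type, round(g.score, 3))
```

A table lookup keeps the example short; in the framework described above, these priors would be "known or learned beforehand", so a learned model could replace the dictionary without changing the ranking step.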

The fundamental idea of this process is to associate local shape features (i.e., object parts) with functional data (e.g., fingertip positions or hand posture, and the relative distance between object and hand). In this way, the optimization process underlying grasp selection can incorporate previously acquired experience and thereby be improved with respect to an overall cost function.

Besides grasping, a second application area for the generic decomposition framework is design conception and optimization. Here, too, it is very advantageous to extract local knowledge about shape primitives and how they influence the overall design quality. On this basis, new design concepts could be generated by combining different desired local performance contributions and selecting the associated shape primitives accordingly.
