Autonomous Exploration of Manual Interaction Space

We gradually increase our manual competence by exploring manual interaction spaces for many different kinds of objects. This is an active process that is very different from passive perception of "samples". The availability of humanoid robot hands offers the opportunity to investigate different strategies for such active exploration in realistic settings. In the present project, these strategies will be investigated from the perspective of "multimodal proprioception": correlating joint angles, partial contact information from touch sensors, joint torques, and visual information about changes in finger and object position, so as to predict "useful aspects" for shaping the ongoing interaction.

To make this very ambitious goal approachable within the resource bounds of a single project, we will focus on an interesting and important specific case of manual interaction spaces: "visually supervised object-in-hand manipulation". Specifically, one could consider rotating an object, e.g. a cube, within the hand so that certain faces become visible one after the other.

This project crucially involves the need to combine visual information with proprioceptive feedback when the fingers explore the faces and edges of the object. A major goal of the project is to implement a "vertical slice" of exploratory skills, ranging from low-level finger control and visual perception of the contact state of each finger, through chunking a limited set of action primitives, to planning short action sequences.

Generic insights should concern how visual and haptic information has to be combined to drive the exploration process, and which principles are suitable for shaping the exploration, such as reinforcement learning, active learning driven by information maximization, or imitation of previously learned episodes (instead of statistical learning).

General Object Local Manipulation Based on Reactive Control

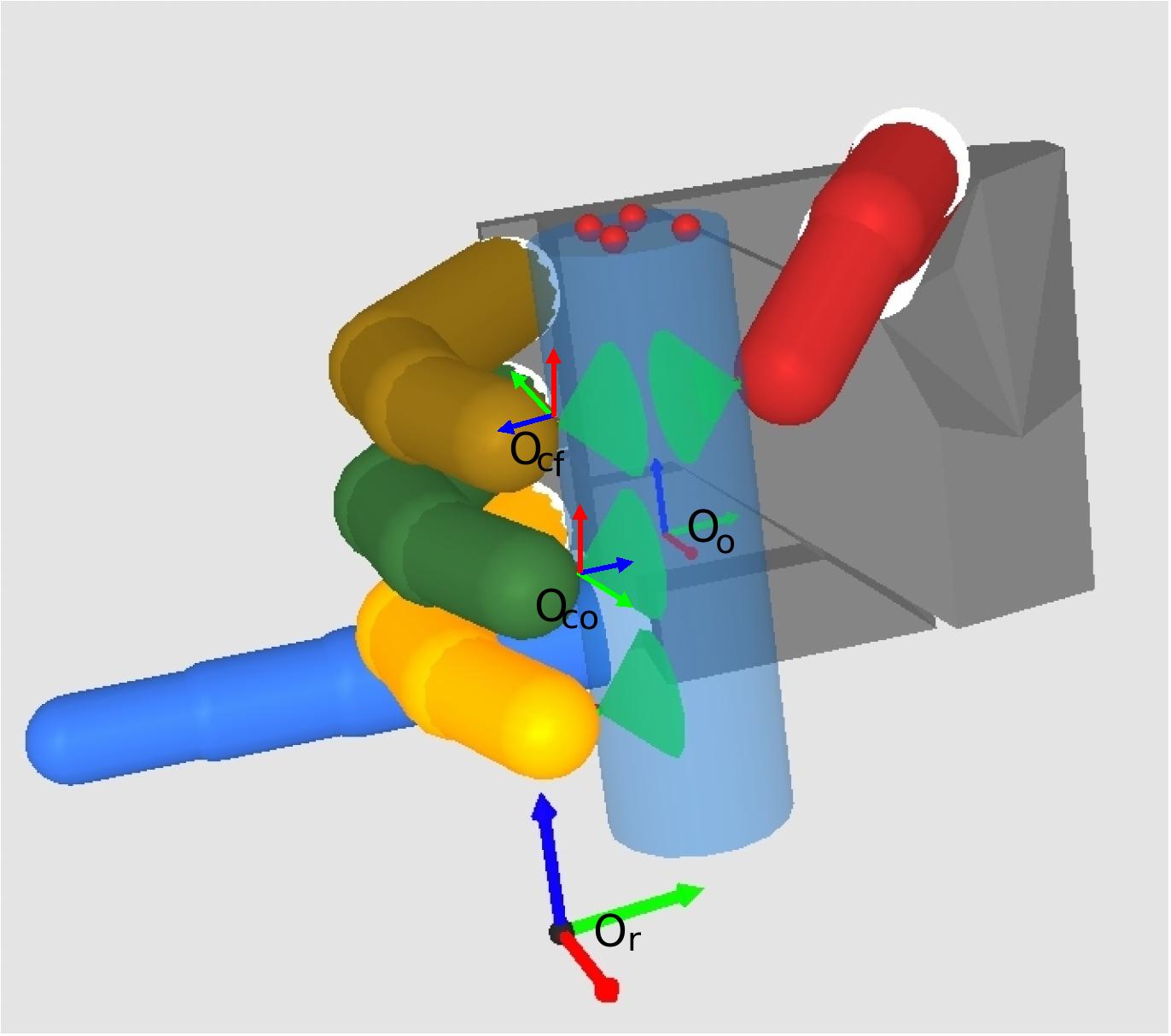

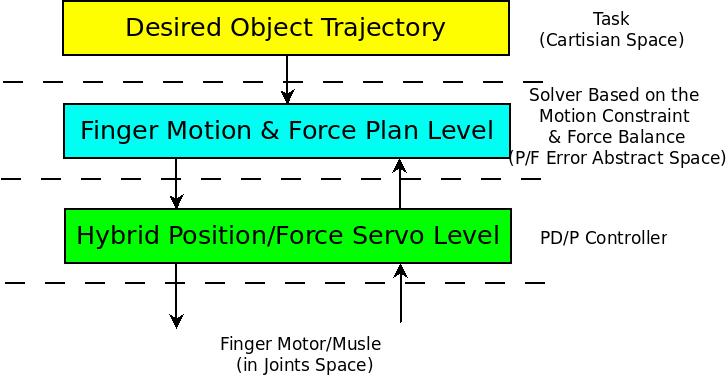

The authors propose a novel reactive strategy for solving a general object local manipulation problem with a multifingered robot hand, based on vision and tactile feedback. The method introduces a micro-manipulation assumption, under which the object can be manipulated within the robot hand workspace without actively and explicitly controlling the rolling and sliding of the fingertips on the object. A 6-DOF reactive controller based on a hybrid of contact force and contact position feedback is developed, and a three-layer hierarchical control structure implements the manipulation strategy. A simulation experiment run on the Vortex physics engine shows the feasibility of the method.

Figure 1. Dexterous Manipulation

Figure 2. Hybrid Position and Force Control Structure
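The hybrid of contact force and contact position feedback mentioned above can be illustrated for a single fingertip. The following is only a minimal sketch under stated assumptions, not the controller used in the project: it assumes a textbook hybrid scheme in which the position error is regulated in the contact tangent plane and the normal force error is regulated along the contact normal. The function name, the gains `kp` and `kf`, and the sign convention (normal pointing into the object) are all hypothetical.

```python
def hybrid_velocity_command(p, p_des, f_n, f_n_des, normal, kp=1.0, kf=0.01):
    """Fingertip velocity command from position and normal-force errors.

    Assumptions (not from the project description):
    - `normal` points from the fingertip into the object,
    - `f_n` is the measured normal contact force (scalar, >= 0),
    - `kp` and `kf` are hypothetical gains.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    length = dot(normal, normal) ** 0.5
    if length == 0.0:
        raise ValueError("contact normal must be non-zero")
    n = tuple(x / length for x in normal)

    # Position error, with its component along the normal removed,
    # so that position control only acts in the tangent plane.
    err = tuple(d - c for d, c in zip(p_des, p))
    along = dot(err, n)
    tangential = tuple(e - along * ni for e, ni in zip(err, n))

    # Force error acts along the normal: press harder if the force is too low.
    force_err = f_n_des - f_n

    return tuple(kp * t + kf * force_err * ni for t, ni in zip(tangential, n))


v = hybrid_velocity_command(
    p=(0.0, 0.0, 0.0),
    p_des=(0.01, 0.0, 0.05),
    f_n=1.0,
    f_n_des=2.0,
    normal=(0.0, 0.0, 1.0),
)
print(v)
```

In this example the desired position offset along the normal is ignored by the position term, and the motion along the normal is driven only by the force error.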

A video of the general object dexterous manipulation simulation is available for download.

Manipulation of Unknown Rotary Object by Static Finite State Machine

We propose a simple but efficient control strategy for manipulating objects of unknown shape, weight, and friction properties; knowledge of these properties is a prerequisite for classical offline grasping and manipulation methods. With this strategy, the object can be manipulated in hand on a large scale (e.g. rotated by 360 degrees), regardless of whether there is rolling or sliding motion between the fingertips and the object. The proposed control strategy employs estimated contact point locations, which can be obtained from modern tactile sensors with good spatial resolution. The feasibility of the strategy is shown in simulation experiments employing a physics engine that provides exact contact information. However, to motivate the applicability in real-world scenarios, where only coarse and noisy contact information will be available, we also evaluated the performance of the approach when adding artificial noise.

Regrasp Point Optimization

In-hand manipulation of unknown objects is a challenging task in the multifingered robot hand manipulation community. We have shown the feasibility of a simple but efficient two-stage manipulation strategy: global finger gait planning combined with local object manipulation planning and control. The local planner and controller move the object within the limits of the robot hand workspace, and the global planner manages finger switching to enable a new cycle of local manipulation.
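The alternation between local manipulation and finger switching can be sketched as a small state machine. This is an illustrative sketch only: the phase names, the fixed per-cycle rotation limit standing in for the hand workspace limit, and the step size are hypothetical, and the real system would decide transitions from sensed contact and joint states rather than from a counter.

```python
from enum import Enum, auto


class Phase(Enum):
    LOCAL_MANIPULATION = auto()  # rotate the object within the workspace
    FINGER_SWITCH = auto()       # regrasp so a new local cycle can start
    DONE = auto()


def run_cycles(target_deg, max_deg_per_cycle, step_deg=1.0):
    """Rotate towards target_deg, regrasping whenever the (assumed)
    per-cycle workspace limit max_deg_per_cycle is reached.

    Returns (total rotation in degrees, number of finger switches).
    """
    rotated = 0.0
    in_cycle = 0.0
    switches = 0
    phase = Phase.LOCAL_MANIPULATION
    while phase is not Phase.DONE:
        if phase is Phase.LOCAL_MANIPULATION:
            if rotated >= target_deg:
                phase = Phase.DONE
            elif in_cycle >= max_deg_per_cycle:
                phase = Phase.FINGER_SWITCH
            else:
                step = min(step_deg,
                           target_deg - rotated,
                           max_deg_per_cycle - in_cycle)
                rotated += step
                in_cycle += step
        else:  # Phase.FINGER_SWITCH
            in_cycle = 0.0
            switches += 1
            phase = Phase.LOCAL_MANIPULATION
    return rotated, switches


print(run_cycles(target_deg=360.0, max_deg_per_cycle=45.0))
```

With a hypothetical limit of 45 degrees per cycle, a full 360 degree rotation takes eight local cycles separated by seven finger switches.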

This paper contributes a method for autonomously bridging these two planners: a regrasp point optimization and selection algorithm that combines grasp quality and manipulability into a global objective function. The algorithm relies on coordinated self-exploration by the multifingered hand. By planning contact point movements and observing the result of executing them, the optimal regrasp points can be obtained. Building on the dexterous manipulation controller, three passive fingers hold the object stably while one active finger explores the unknown object surface to find the optimal regrasp point.
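How grasp quality and manipulability might be combined into one objective can be sketched as follows. The text does not state the form of the combination, so this sketch assumes a weighted sum of min-max normalised scores; the `Candidate` type, the weight `w_quality`, and the example values are hypothetical, and the two scores are taken as given (for instance, as observed by the exploring finger).

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    point: tuple           # candidate contact position (x, y, z)
    grasp_quality: float   # higher is better (metric not specified here)
    manipulability: float  # higher is better


def select_regrasp_point(candidates, w_quality=0.5):
    """Pick the candidate maximising an assumed weighted-sum objective."""
    if not candidates:
        raise ValueError("no candidate regrasp points")

    def normalise(values):
        lo, hi = min(values), max(values)
        span = hi - lo
        return [0.0 if span == 0 else (v - lo) / span for v in values]

    quality = normalise([c.grasp_quality for c in candidates])
    manip = normalise([c.manipulability for c in candidates])
    scores = [w_quality * q + (1.0 - w_quality) * m
              for q, m in zip(quality, manip)]
    best = max(range(len(candidates)), key=scores.__getitem__)
    return candidates[best], scores[best]


explored = [
    Candidate((0.02, 0.00, 0.05), grasp_quality=0.2, manipulability=0.9),
    Candidate((0.02, 0.01, 0.05), grasp_quality=0.8, manipulability=0.5),
    Candidate((0.02, 0.02, 0.05), grasp_quality=0.5, manipulability=0.1),
]
best, score = select_regrasp_point(explored)
print(best.point, score)
```

Normalising both terms keeps either metric from dominating only because of its scale; the weight then expresses the intended trade-off.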

The feasibility of the regrasp point selection algorithm is shown in simulation experiments employing a physics engine that provides exact contact information (position, normal vector, and contact force). To motivate the applicability in real-world scenarios, where only coarse and noisy contact information will be available, we also evaluated the performance of the approach when adding artificial noise.
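One way to add artificial noise to exact simulated contact information is sketched below. The noise model is an assumption: the text does not say which distribution or magnitudes were used, so this sketch uses independent zero-mean Gaussian noise, renormalises the perturbed normal, and treats the contact force as a non-negative scalar. The function name and all sigma values are hypothetical.

```python
import math
import random


def add_contact_noise(position, normal, force,
                      sigma_pos, sigma_normal, sigma_force, rng):
    """Return a noisy copy of (position, unit normal, force magnitude).

    Assumed model: independent zero-mean Gaussian noise per component.
    """
    noisy_pos = tuple(x + rng.gauss(0.0, sigma_pos) for x in position)

    # Perturb the normal, then rescale it back to unit length.
    perturbed = [x + rng.gauss(0.0, sigma_normal) for x in normal]
    length = math.sqrt(sum(x * x for x in perturbed))
    if length == 0.0:
        noisy_normal = tuple(normal)  # degenerate case: keep the original
    else:
        noisy_normal = tuple(x / length for x in perturbed)

    # A contact force magnitude cannot be negative, so clamp at zero.
    noisy_force = max(0.0, force + rng.gauss(0.0, sigma_force))
    return noisy_pos, noisy_normal, noisy_force


rng = random.Random(0)  # fixed seed so that experiments are repeatable
print(add_contact_noise((0.1, 0.0, 0.0), (0.0, 0.0, 1.0), 2.0,
                        sigma_pos=0.002, sigma_normal=0.05,
                        sigma_force=0.1, rng=rng))
```

Passing the random generator in explicitly makes it straightforward to rerun the same noisy episode when comparing controller variants.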