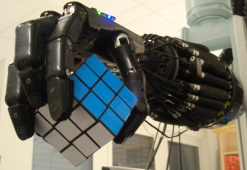

We gradually increase our manual competence by exploring manual interaction spaces for many different kinds of objects. This is an active process that differs sharply from the passive perception of "samples". The availability of humanoid robot hands offers the opportunity to investigate different strategies for such active exploration in realistic settings. In the present project, these strategies will be investigated from the perspective of "multimodal proprioception": correlating joint angles, partial contact information from touch sensors and joint torques, as well as visual information about changes in finger and object position, in such a way as to predict "useful aspects" for shaping the ongoing interaction.
