Action Recognition

The visual detection and recognition of human actions by technical systems is a fundamental problem with many applications in human-computer interaction. Activities involving hand-object interactions and action sequences in goal-oriented tasks, such as manufacturing work, pose a particular challenge. We use deep learning to detect and recognize such actions in real time, and we propose an assistance system that gives the user feedback and guidance based on the recognized actions.

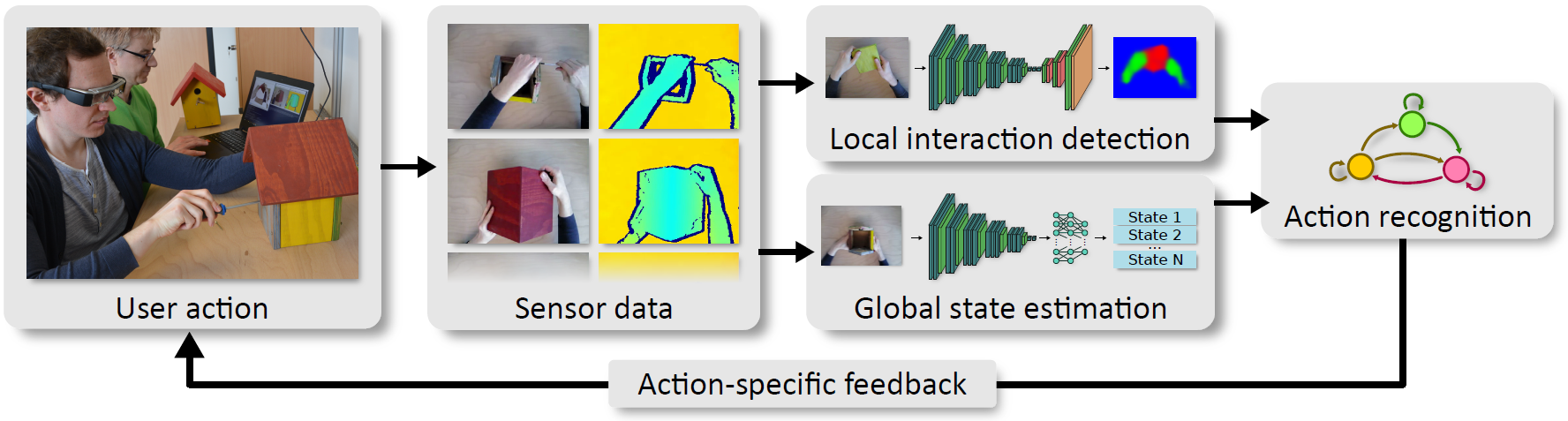

Our system recognizes user actions captured by a static RGBD sensor by combining two kinds of information extracted from the sensor data: local interactions and global progress. Convolutional neural networks detect local hand-object interactions and estimate the overall state of the activity's progress. A probabilistic state machine then combines this local and global information using Bayesian inference to recognize the user's action. Based on this recognition, the user is shown action- and context-sensitive prompts in real time, which help with performing the next step or recovering from errors.
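The combination of local and global evidence described above can be illustrated with a discrete Bayes filter. The following is a minimal sketch, not the system's actual implementation: the function name `bayes_filter_step`, the three-state task, the transition probabilities, and the assumption that the two evidence sources are conditionally independent given the state are all hypothetical choices made for illustration.

```python
def bayes_filter_step(belief, transition, global_probs, local_likelihood):
    """One predict/update step of a discrete Bayes filter over activity states.

    belief:           current probability of each activity state
    transition:       transition[i][j] = P(next state j | current state i)
    global_probs:     per-frame state scores (assumed: output of a global-state CNN)
    local_likelihood: P(observed hand-object interaction | state) (assumed)
    """
    n = len(belief)
    # Predict: propagate the belief through the state machine.
    predicted = [sum(belief[i] * transition[i][j] for i in range(n))
                 for j in range(n)]
    # Update: weight each state by both evidence sources
    # (assumed conditionally independent given the state).
    unnormalized = [predicted[j] * global_probs[j] * local_likelihood[j]
                    for j in range(n)]
    total = sum(unnormalized)
    if total == 0.0:
        # The evidence contradicts every reachable state; keep the prediction.
        return predicted
    return [u / total for u in unnormalized]


# Hypothetical three-step task: each step either persists or advances.
transition = [
    [0.8, 0.2, 0.0],
    [0.0, 0.8, 0.2],
    [0.0, 0.0, 1.0],
]
belief = [1.0, 0.0, 0.0]             # the task starts in the first state
global_probs = [0.3, 0.6, 0.1]       # illustrative per-frame CNN output
local_likelihood = [0.2, 0.7, 0.1]   # illustrative interaction evidence

belief = bayes_filter_step(belief, transition, global_probs, local_likelihood)
most_likely_state = max(range(len(belief)), key=lambda j: belief[j])
```

Because the transition matrix assigns zero probability to skipping a step, the third state stays at zero even if a single frame's evidence favored it. This is how a state machine can suppress implausible per-frame estimates.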

Local hand-object interactions are detected in the sensor data using a fully convolutional neural network, which was trained to densely discriminate between hand and object pixels. The resulting dense pixel labeling is used to track the object using geometric registration. The current global state of the activity progress is estimated using another convolutional neural network, which computes a probability distribution over the known activity states. These per-frame estimates are placed in the context of the overall activity using Bayesian inference in conjunction with a probabilistic activity state machine. Based on this action recognition, a context-sensitive feedback message is selected and presented to the user.
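The text does not say which geometric registration method is used for object tracking. As one common possibility, the sketch below estimates a rigid transform between two sets of corresponding 3D points with the Kabsch algorithm (least-squares alignment via singular value decomposition). It assumes that point correspondences are already known and that the points labeled as object have been back-projected to 3D; the function name `estimate_rigid_transform` and the example data are hypothetical.

```python
import numpy as np


def estimate_rigid_transform(source, target):
    """Least-squares rotation R and translation t so that R @ source[i] + t ~ target[i].

    source, target: arrays of shape (N, 3) with row-wise corresponding points.
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    src_centroid = source.mean(axis=0)
    tgt_centroid = target.mean(axis=0)
    # 3x3 cross-covariance matrix of the centered point sets.
    H = (source - src_centroid).T @ (target - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_centroid - R @ src_centroid
    return R, t


# Illustrative data: a model rotated by 30 degrees about the z axis and shifted.
angle = np.deg2rad(30.0)
true_R = np.array([
    [np.cos(angle), -np.sin(angle), 0.0],
    [np.sin(angle),  np.cos(angle), 0.0],
    [0.0,            0.0,           1.0],
])
true_t = np.array([0.1, -0.2, 0.05])
object_points = np.array([
    [0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [1.0, 1.0, 0.5],
])
observed_points = object_points @ true_R.T + true_t

R, t = estimate_rigid_transform(object_points, observed_points)
```

In practice, correspondences between a model and sensor points are usually unknown, so a step like this is typically embedded in an iterative scheme such as ICP that alternates between matching points and re-estimating the transform.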