Universität Bielefeld › Technische Fakultät › NI

Search

Figure-ground segmentation

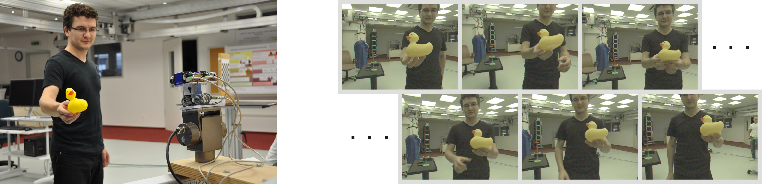

In the scientific field of cognitive robotics classical research topics are the construction of robotic systems, their sensory capabilities and the control of this hardware. Subject of current research is to endow artificial systems with a flexible and intelligent behavior in their environment. The development on cognitive systems is driven by the intention to construct a versatile robot that can be used in dynamically changing and even novel situations. The processing of visual information is an important component in this context, e.g.~for the visual localization, navigation and the recognition of physical objects in the environment. Relevant questions with respect to the acquisition of visual knowledge are how to represent a huge number of visual objects, how to discriminate between them and to recognize known objects also in new visual context. But sensory processing cannot be simply decoupled from the whole system and visual learning has to be investigated in the context of an interaction of the system with its environment or human tutors. To illustrate this, Fig. 1 displays a human-robot interaction showing the typical setup as well as the view from the system perspective. An unconstrained interaction can be characterized by a human tutor in front of a dynamically changing and cluttered background. The object of interest is showed by hand and is freely rotated during the presentation. To enable learning in such scenario the system has to determine where the behaviorally relevant parts of the scene are and which image regions belong to a particular physical entity.

|

| Fig. 1: The left image shows a typical human-robot interaction, where a human tutor presents an object to an artificial vision system. This scenario is unconstrained in the sense that learning and recognition takes place in a dynamical scene. That is, the tutor presents the object by hand from an arbitrary viewing position in front of a cluttered background. On the right, a short sequence of frames from the system perspective is presented, demonstrating the interactive scenario.

|

In human-machine interaction research, the learning of visual representation under such general environment conditions becomes increasingly important. The main goal is to reach a symbolic level for a compact unambiguous description of the visual data. Therefore the segmentation of objects from their surrounding background is relevant for object learning and recognition. Image segmentation is one of the most challenging tasks in computer vision and a crucial concept in multiple applications. A special case of image segmentation is figure-ground segmentation, which is the process that separates the image into two regions, the object of interest and the background clutter. This process serves as preprocessing step for machine learning techniques to separate the visual features of the object from the features occurring in the background. Regarding subsequent object learning, this step is necessary in order to determine the visual properties of objects, such as their shape for instance. Furthermore a figure-ground segmentation allows the application of object recognition methods in unconstrained environment with cluttered background and increases their efficiency by constraining the computation to the relevant location of the image. In other words, a figure-ground segmentation separates the object identity and the location in the scene i.e. achieves invariance to the stimulus position.

A hypothesis-based approach

A hypothesis-based approach

In the initial learning phase we cannot assume an already acquired object-representation, i.e. the appearance of the objects is unknown. In this case an external cue is necessary to guide the attention of the system to a particular location in the scene in order to bootstrap the learning procedure. We use a hypothesis-based concept where such segmentation cue can be generated by a bottom-up saliency or cues like motion and stereo disparity to obtain the relevant object regions. But these methods only partially address the question what is related to a certain object or physical entity. The cues provided by motion and depth estimation are hard to estimate on homogenously colored regions and therefore may be only partially available. Additionally depth estimation can only give a coarse approximation of the object outline due to the ill-posed task to recover 3D information from 2D data. Therefore the obtained image regions only correspond very roughly to an object. The task of hypothesis-based figure-ground segmentation models is to assign each pixel to foreground or background on the basis of the initial cue and the input image. We investigate algorithms and methods to represent the properties of figure and ground regions and to obtain such pixel-wise decision which image parts are related to one of the two classes. We focus our investigation on vector-based neural networks and model figure and ground with prototypical feature representatives e.g. consisting of color and position information. Using such method, pixels are assigned to the most similar prototype which represents, according to the underlying metrics, the homogenous region of the image. Additionally we use metrics manipulations to improve the pixel-wise classifier for features. That is, the standard Euclidian distance function to measure the similarity of pixel-features and prototypes is replaced by more complex and adaptive distance functions.

- Login to post comments